Chapter 2: Bits And Data Types

Data representation and operations in a computer system.

Bits #

Binary digits (or bits) are the primitive unit of information in a computer system.

A bit is essentially the existence or non-existence of a voltage in a computer; any voltage which is measured close to zero(0) means a non-existent signal while anything bigger than one(1) means the existence of a signal.

A data type provides the context on what a string of bits represent ie. an integer, character code, or floating point number.

Representations #

Integers #

A data type that represents the number zero, positive, and negative whole numbers.

Unsigned Integers #

Represents positive integers and zero only.

For some number of bits , there can be to unsigned integers to be represented.

For example, if there are three(3) bits, then it can represent the unsigned integers to .

| Bit | Decimal |

|---|---|

| 000 | 0 |

| 001 | 1 |

| 010 | 2 |

| 011 | 3 |

| 100 | 4 |

| 101 | 5 |

| 110 | 6 |

| 111 | 7 |

Signed Integers #

There are three main ways to represent signed integers, these are namely:

Signed Magnitude #

Represents integers by reserving the left-most bit (also known as the sign bit) as an indicator of whether it is a positive or negative number.

For a given positive non-zero integer , there is a total of to represent the magnitude of numbers in this representation.

For example if , then there are total numbers to represent the positive or negative integers.

| Bit | Decimal |

|---|---|

| 000 | 0 |

| 001 | 1 |

| 010 | 2 |

| 011 | 3 |

| 100 | -0 |

| 101 | -1 |

| 110 | -2 |

| 111 | -3 |

1's Complement #

Represents integers by complementing the bits: inverting the to a and vice versa.

This representation also uses a sign bit to indicate whether it is positive or negative.

For example, to get the 1's complement of the decimal number , we first get its bit representation which is , then complement the bits which would result in .

| Bit | Decimal |

|---|---|

| 000 | 0 |

| 001 | 1 |

| 010 | 2 |

| 011 | 3 |

| 100 | -3 |

| 101 | -2 |

| 110 | -1 |

| 111 | -0 |

2's Complement #

Represents integers the same way as the 1's Complement (ie. inverting the values), but has an extra step of adding a to the result.

In this representation, the sign bit represents .

For example to represent the decimal number we first get its 1's Complement which is , then add which results in :

| Bit | Decimal |

|---|---|

| 000 | 0 |

| 001 | 1 |

| 010 | 2 |

| 011 | 3 |

| 100 | -4 |

| 101 | -3 |

| 110 | -2 |

| 111 | -1 |

Bit Integer Arithmetic #

Addition #

Given 4-bits, adding as unsigned integers results in:

Subtraction #

Given 4-bits, adding as signed integers results in:

Bit Shifting #

A property of binary arithmetic is that adding a number by itself shifts the bits to the left ie. , this is simply because the value is multipled by [a multiple of] :

Corollary, dividing the bits by [a multiple of] shifts it to the right:

Sign Extension #

Two bit strings with differing lengths can be processed by extending the sign bit of the smaller length bit string.

For example, given the number as a 5-bit integer and the number as a 3-bit integer:

- Represent in binary:

- Represent in binary:

- Extend the sign-bit of to 5-bits:

- Now add:

Overflow/Underflow #

Since computers work with a finite length of bits, there comes a situation where processing bits would result in an error such as an overflow or underflow of values.

An overflow result would be when, for example, adding two signed integers goes over the maximum magnitude.

For example on a 5-bit string which has a maximum positive integer of , adding two positive integers would result in a negative integer, for example .

An underflow is the same but results in a positive integer if two negatives are processed and go over the minimum negative integer.

Bit Logical Operations #

NOT (Complement) #

Returns if the given value is and vice versa.

| A | NOT |

|---|---|

| 0 | 1 |

| 1 | 0 |

AND #

For two given bits and , the operator returns a iff. both and are , otherwise.

| A | B | AND |

|---|---|---|

| 0 | 0 | 0 |

| 0 | 1 | 0 |

| 1 | 0 | 0 |

| 1 | 1 | 1 |

AND is commonly used for bit-masking: the retrieval or extraction of specific information in a bit string.

For example, it can be used to determine if a 2's complement bit string is odd:

3 & 1 = 1; // Odd => 011 & 001 = 001 = 1

2 & 1 = 0; // Even => 010 & 001 = 000 = 0OR #

For two given bits and , the operator returns a iff. either or are , if both and are otherwise.

| A | B | OR |

|---|---|---|

| 0 | 0 | 0 |

| 0 | 1 | 1 |

| 1 | 0 | 1 |

| 1 | 1 | 1 |

XOR (Exclusive OR) #

For two given bits and , the operator returns a iff. either or are but not both.

| A | B | XOR |

|---|---|---|

| 0 | 0 | 0 |

| 0 | 1 | 1 |

| 1 | 0 | 1 |

| 1 | 1 | 0 |

XOR can be used to check if two bit strings are the same.

De Morgan's Law #

States two properties of composed logical operators:

- The complement of is equivalent to .

| A | B | NOT(A) | NOT(B) | NOT(A) AND NOT(B) | NOT(NOT(A) AND NOT(B)) | A OR B |

|---|---|---|---|---|---|---|

| 0 | 0 | 1 | 1 | 1 | 0 | 0 |

| 0 | 1 | 1 | 0 | 0 | 1 | 1 |

| 1 | 0 | 0 | 1 | 0 | 1 | 1 |

| 1 | 1 | 0 | 0 | 0 | 1 | 1 |

- The complement of is equivalent to .

| A | B | NOT(A) | NOT(B) | NOT(A) OR NOT(B) | NOT(NOT(A) OR NOT(B)) | A AND B |

|---|---|---|---|---|---|---|

| 0 | 0 | 1 | 1 | 1 | 0 | 0 |

| 0 | 1 | 1 | 0 | 1 | 0 | 0 |

| 1 | 0 | 0 | 1 | 1 | 0 | 0 |

| 1 | 1 | 0 | 0 | 0 | 1 | 1 |

Floating Point #

Is a form of scientific notation that represents Real numbers in a finite set of bits.

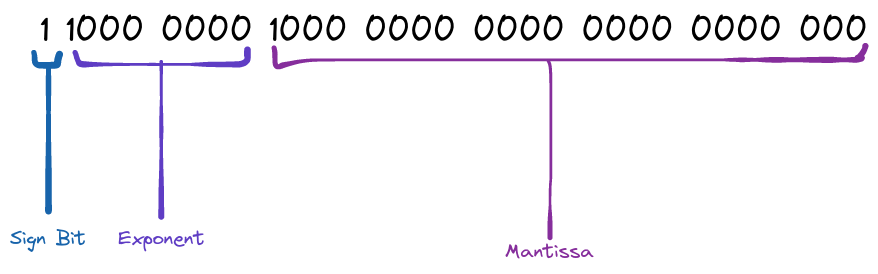

The most widely adopted standard to represent floating point arithmetic is the IEEE-754 standard where in a 32-bit system:

- The left-most bit is the sign bit.

- The next 8 bits are the exponent which is calculated by subtracting the excess.

- And the remaining 23 bits are the mantissa.

Notation:

Where:

- S is the sign bit.

- M is the mantissa.

- E is the exponent derived from some bit string that is subtracted to (the excess number). For example, if the exponent is , then the bit string to represent this in floating point notation is .

To convert a Real number to a floating point:

- Convert the significands on the left and right sides of the radix (decimal point):

- The left-side is derived by continuously dividing the value by 2 and retrieving the remainders.

- The right-side is derived by continuously multiplying the value by 2 and retrieving the value on the right hand side of the result. This should have repeating patterns.

- Move the decimal point either left or right to get the exponent.

ASCII #

Represents a character with a unique integer in a single byte.